LinguaAI: AI-Powered Personalized Conversations for Comprehensive Language Learning

Beyond Traditional Learning: The GPT-4 Edge in Adaptive Language Tutoring for any Language and any Level.

As the accessibility of Large Language Models (LLMs) via APIs surges, a proliferation of opportunities for AI-centric innovations becomes evident. Answering this call is the nascent discipline of prompt engineering, distinguishing itself from the more resource-intensive fine-tuning of LLMs. Prompt engineering demands less in terms of data, resources, and deep model development expertise, facilitating the swift deployment of robust production-grade solutions.

The project is open-sourced and available on GitHub.

Prompt engineering

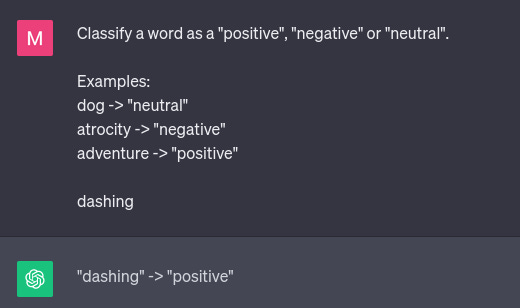

Prompt engineering has been applied to various tasks, from arithmetic reasoning to domain-specific question answering. At its most fundamental level, a prompt is the input to the language model that shapes the response of the model. For instance, the input “In the afternoon it will …” yields a response such as “In the afternoon, it will typically become warmer and brighter as the day progresses, assuming it's a typical sunny day. However, the specific weather conditions can vary depending on your location, time of year, and local climate patterns.” In the same vein, prompts can serve various purposes, including facilitating question answering (Figure 1) and fostering creativity in tasks like crafting poems (Figure 2).

Figure 1: Question answering task using ChatGPT

Figure 2: Creative writing task using ChatGPT

As task intricacy escalates, there's a commensurate increase in prompt complexity. This frequently necessitates task decomposition, nuanced contextualization, and the meticulous selection of phrasing within the prompt.

Several techniques for prompt engineering leverage the well-known capabilities of deep neural networks, such as zero-shot and few-shot learning. Zero-shot learning is the ability of deep networks to produce correct solutions for unseen tasks. In the realm of prompt engineering, this entails providing a task description and a solitary input example to solicit the desired output. For more intricate tasks, though, significant enhancements in model performance can be achieved by furnishing a small set of input-output examples, thereby creating a few-shot learning scenario. Figures 2 and 3 illustrate concrete instances of zero-shot and few-shot learning in action.

Figure 3: Zero-shot learning task with ChatGPT

Figure 4: Few-shot learning task with ChatGPT

Overall, when developing quality prompts, there is no one-size-fits-all solution. Crafting a high-quality prompt is a dynamic and iterative process tailored to specific use cases. Nevertheless, there have been efforts to formulate and systemise effective prompt creation methods, resulting in the formulation of prompt patterns, a prompt engineering equivalent of design patterns.

Application of prompt engineering to a personalised language learning task

This article delves into prompt engineering techniques for the development of a language learning chatbot powered by GPT models. The objective is to create a highly adaptable chatbot tailored for language learning and practice via prompt engineering. Users should have the flexibility to select their desired language, language proficiency level, and the context in which they want to engage with the chatbot. These three key components collectively shape the conversational context. The ultimate goal is for the chatbot to seamlessly generate coherent and contextually appropriate conversations that align with the chosen language proficiency level. Illustrative conversations conducted with the LinguaAI chatbot can be found in Figures 5 and 6.

Figure 5: Conversation with LinguaAI “In a restaurant”. The conversation is carried out in English on B2 level.

Figure 6: Conversation with LinguaAI “In a restaurant”. The conversation is carried out in French on A2 level.

Before going into detail about implementation methods, it is imperative to underscore the core objectives and essential attributes that we seek to achieve with our solution. The fundamental requirement is that the conversation follows the prescribed dialogue format, yielding a single, grammatically correct response per chatbot prompt. We'll refer to these as "format requirements" henceforth. Furthermore, we wish the chatbot to possess common language skills such as fluency and coherence and we wish the language proficiency level to be adhered to. Lastly, the chatbot should be able to embody its designated role effectively, employing common phrases, vocabulary, and a speech style befitting the assigned persona or context.

Taking into account the qualities of an effective language-learning chatbot as outlined above, the proposed solution comprises the following key subtasks:

Asserting user inputs

Inferring conversation roles from the setting description

Main chatbot dialogue generation

Chatbot response refinements

The LinguaAI chatbot architecture, outlined in Figure 7, consists of several components, each responsible for carrying out one of the aforementioned subtasks.

Figure 7. LinguaAI chatbot architecture

Asserting user inputs

Given the open-ended nature of the user's description of the conversational context, it is essential to verify its suitability and clarity before moving forward. To address this, the solution involves the implementation of a straightforward binary classifier integrated with a Large Language Model (LLM). In this setup, an output of "1" signifies that the input qualifies as a situation, place, or activity description, typically involving two interlocutors, while an output of "0" indicates that it does not meet these criteria.

Role inference

Once the user input has been validated, the context is then passed to the role inference model. The purpose of this step is to enhance the natural flow of the dialogue and facilitate understanding of the task for both the model and the user. This is achieved by inferring two distinct, natural roles, representing the participants in the discussion tailored to the given context. The role designated to guide the conversation is assigned to the chatbot, while the other role is allocated to the user. This allocation ensures a more coherent and intuitive interaction.

Main chatbot dialogue generation

After role inference, we have all relevant information at our disposal and we are able to commence the dialogue. To initiate the dialogue, a zero-shot task definition is used as a prompt. The information included in the prompt is the full conversation context, the Persona Pattern instructions, the CEFR language level definition and the format guidelines. Every subsequent prompt is expected to be a user’s message. In response, the chatbot generates a single conversational line as its reply.

LLM response refinement

Following the initial response generated by the primary chatbot, a series of targeted refinements is applied to the response. This iterative refinement process is motivated by the fact that it results in improved performance of Large Language Models (LLMs) compared to the baseline zero-shot approach.

The goal of individual refinements is to ensure the outlined properties of a good language-learning chatbot are met. The first refinement in the pipeline is designed to confirm that the assigned role is adhered to, while the subsequent refinement focuses on ensuring that the appropriate language proficiency level is maintained.

We have observed that common language skills such as fluency and coherence and the format are satisfied for the vast majority of text produced by the model, so no refinement was performed for this purpose.

The fundamental role fitness and language level correlation

Our refinements are primarily directed at two crucial properties: language proficiency level and role fitness. It's essential to emphasise the inverse relationship between these two properties. We prioritise language proficiency level because it forms the foundation of the language learning process, and in most cases, generic roles do not significantly suffer as a result. However, for more specific roles, such as mimicking a particular individual, there may be a trade-off where some nuances in style and tone of the text could be sacrificed in favour of maintaining the desired language proficiency level.

Refinement prompts alternative evaluation

Recognizing that the input space for a refinement pipeline is virtually limitless and that there are multiple equally valid prompt solutions, we found that simply experimenting with prompts to assess refinement quality was no longer sufficient. Our objective was to demonstrate that refining responses genuinely enhances their quality in terms of role fitness and language proficiency level matching.

To achieve this, we created a dataset that consists of 18 dialogues, with three dialogues for each CEFR language level, for each refinement prompt alternative. These datasets were automatically generated by supplying a chatbot without a refinement pipeline to act as a user. The evaluation process involved the use of two evaluation bots developed through prompt engineering. Each message generated from the assessed prompt received a score, and the scores were then averaged for each conversation. This approach allowed for a more comprehensive and objective assessment of refinement quality. The results somewhat differed depending on the GPT model used.

When dealing with GPT-4, it was observed that all refinements and initial responses exhibited a near-perfect language proficiency level. However, refinements introduced only moderate improvements in terms of role fitness.

On the other hand, with GPT-3.5-turbo, refinements led to significant enhancements in both language proficiency and role fitness criteria. Nevertheless, GPT-3.5-turbo encountered difficulties in maintaining text simplicity for the A1 and A2 language levels. To address this issue, a text simplification refinement bot was added to the end of the pipeline. This bot is designed to activate exclusively for A1 and A2 language levels, ensuring that the text remains basic and easy to understand for users at these proficiency levels.

Challenges Encountered During the Development Process

Fine-tuning the temperature setting to suit the specific task at hand is a common practice when working with Large Language Models (LLMs). However, when adjusting the temperature, we encountered a dual effect. Increasing the temperature elevates the likelihood of format requirements not being met, but it also introduces more variability in the chatbot's responses. This variability allows two dialogues with identical context to evolve in various directions, which is valuable for the language learning process.

In the end, we opted for a moderate temperature setting for the model. It's important to note that this issue was more pronounced with GPT-3.5 compared to GPT-4.

Furthermore, it was challenging to develop an evaluation process for prompt performance. To automate the score assignment process, the quality of evaluation bots had to be manually checked beforehand. This was performed using small manually crafted datasets targeting the edge cases. Given that a certain lack of trust should be present for such manual construction, the results of the evaluation process have been taken with a grain of salt. Several best-performing prompt alternatives have been further examined in a real-use setting before making small adjustments to prompts and selecting the most effective one.

Future developments

There are several ways to extend the functionality of the chatbot and improve its user utility for language learning.

Feedback constitutes a foundational stage in the learning process. User messages can be assessed and constructive feedback can be generated to enhance the user's experience.

Speaking and listening are crucial aspects of learning a foreign language because they help learners develop their oral communication skills and fluency, enabling them to engage in real-life conversations and effectively convey their thoughts and ideas. These skills also enhance comprehension, pronunciation, and cultural understanding, making the language learning experience more immersive and practical. Therefore, to further enhance LinguaAI, it's essential to incorporate the ability to record and listen to messages, coupled with robust text-to-speech and speech-to-text technologies.

The current refinement process involves initiating an API call for each refinement, leading to slower response times for users. It is imperative to explore strategies that can enhance response speed, either by minimising the frequency of API calls or by considering alternative approaches.

Adjusting the temperature parameter is essential to influence the diverse characteristics of the generated responses.

Key takeaways

Prompt engineering with LLMs such as GPT has allowed us to quickly develop complex AI based solutions. Building a customizable chatbot for language learning relies on several different prompt engineering approaches, from zero-shot learning to iterative refinements. The development process involves user input assessment, role inference, main chatbot dialogue generation, and response refinements. Notably, the interplay between language level and role fitness is crucial and adjustments in temperature settings influence the chatbot's behaviour. To enhance the language learning experience for users, it's beneficial to expand LinguaAI with feedback, progress tracking and audio capabilities. These features can provide users with valuable insights into their language learning journey and help them make meaningful improvements over time.