Named Entity Recognition Using a Pre-Trained LayoutLMv2 Model

The amount of data generated by humans is ever-increasing, and much of it is stored in documents and forms that need to be analyzed to extract relevant information. Today, many companies still extract data manually, which is tedious and time-consuming. Rule-based systems are sometimes used, but they require careful engineering and tend to struggle when the environment changes, leading to costly adjustments. With the rapid advancement of NLP fueled by the success of deep learning, neural networks were recognized as a potential new approach to automatic document processing. Soon after, LSTMs and transformers began to outperform humans in document understanding and data extraction, especially when speed and cost are taken into consideration.

However, since standard language models work only with word sequences, they miss a lot of the information stored in the visual aspects of documents, such as their layout. The importance of layout is intuitive to us as humans. For example, imagine reading C code purely as a sequence of symbols:

    void swap(int* xp, int* yp){int temp = *xp;*xp = *yp;*yp = temp;}void bubbleSort(int arr[], int n){int i, j; for (i = 0; i < n - 1; i++) for (j = 0; j < n -i -1;j++) if (arr[j] > arr[j + 1]) swap(&arr[j], &arr[j + 1]);}

A disaster! In contrast, here is the same code, properly formatted:

    void swap(int* xp, int* yp){
        int temp = *xp;
        *xp = *yp;
        *yp = temp;
    }

    void bubbleSort(int arr[], int n){
        int i, j;
        for (i = 0; i < n - 1; i++)
            for (j = 0; j < n - i - 1; j++)
                if (arr[j] > arr[j + 1])
                    swap(&arr[j], &arr[j + 1]);
    }

Obviously, understanding formatted code is much easier. With a similar line of thought in mind, researchers at Microsoft developed layout language models (LayoutLMs). LayoutLMs are transformer-based models that, alongside the text, have access to the layout of the document, that is, the document's image and the bounding box of every word. At the time of writing, three major LayoutLM models had been released: LayoutLM, LayoutLMv2 and LayoutLMv3. All of them achieved state-of-the-art performance on a variety of visually-rich document understanding tasks.

The Model: LayoutLMv2/LayoutXLM

In 2020, one year after the release of the original LayoutLM paper, a paper describing LayoutLMv2 was published. The two models have similar architectures, both using three types of embeddings: text, layout and visual embeddings. Depending on the model version, the embeddings come in different flavours, but generally, these are their descriptions:

text embedding: fixed-length vector representation of the tokenized text,

layout embedding: representation of the axis-aligned bounding box of each token, and

visual embedding: the page image encoded into a fixed-length sequence of region features by a CNN-based visual encoder. In the original LayoutLM, the regions correspond to the token bounding boxes; in LayoutLMv2, they form a fixed grid over the page.

In LayoutLMv2, the visual and text embeddings are concatenated into a single sequence, the layout embedding is added to each element, and the result is forwarded to the transformer layers.

The major difference between the two models is that LayoutLMv2 uses all embedding types in its pre-training objectives, while LayoutLM does not use visual embeddings until fine-tuning. This helps LayoutLMv2 learn cross-modality interactions between visual and textual information, leading to an increase in performance.

The original LayoutLMv2 was pre-trained on English documents and thus could not be used on the provided dataset of German documents. Luckily, LayoutXLM, a multilingual version of LayoutLMv2, exists. This model has the same architecture and pre-training objectives but is pre-trained on multilingual documents.

More detailed descriptions of LayoutLMs can be found in their respective papers, linked in the references section.

Dataset

A dataset of invoice documents was used to fine-tune the model. As previously mentioned, the documents are in German. The raw dataset consists of PDFs and their OCR scans in JSON format. The scans include bounding box information for each recognized word. Since LayoutLMv2/XLM can only take one page at a time, multi-page documents were split so that each sample consists of one page. The OCR scan files contained a lot of data irrelevant to LayoutXLM, so a script was written to extract the important data and arrange it in CSV format, which simplifies and speeds up data loading. The PDFs were converted to PNG files and resized to 224x224 (the dimension stated in the paper). A dummy excerpt of a CSV and a document image follow:

word, x0, y0, x1, y1, label

Bei, 45, 64, 65, 76, ZERO

der, 67, 64, 101, 76, ZERO

Westschmiede, 104, 64, 121, 76, SUPPLIER

in, 123, 64, 182, 76, ZERO

Düsseldorf, 184, 64, 229, 76, CITY
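As an illustration of why the CSV layout keeps data loading simple, here is a minimal sketch that parses the dummy excerpt above into parallel lists of words, bounding boxes and labels. The function name `load_page` and the in-memory sample string are assumptions made for this example, not part of the original preprocessing script.

```python
import csv
import io

# Dummy excerpt copied from the article; real files would be read from disk.
SAMPLE_CSV = """word, x0, y0, x1, y1, label
Bei, 45, 64, 65, 76, ZERO
der, 67, 64, 101, 76, ZERO
Westschmiede, 104, 64, 121, 76, SUPPLIER
in, 123, 64, 182, 76, ZERO
Düsseldorf, 184, 64, 229, 76, CITY
"""


def load_page(csv_text):
    """Split one page's CSV into parallel lists of words, boxes and labels.

    Hypothetical helper: assumes the column names shown in the excerpt.
    """
    words, boxes, labels = [], [], []
    # skipinitialspace drops the blank that follows each comma in the excerpt.
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    for row in reader:
        words.append(row["word"])
        boxes.append([int(row[key]) for key in ("x0", "y0", "x1", "y1")])
        labels.append(row["label"])
    return words, boxes, labels


words, boxes, labels = load_page(SAMPLE_CSV)
print(words[2], boxes[2], labels[2])
```

Note that this simple format would need quoting for any word that itself contains a comma.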

During preprocessing, several issues were detected:

LayoutXLM was pre-trained using a maximum sequence length of 512. Longer examples were truncated.

Some bounding box coordinates were either missing or out of bounds. Since this was not a common occurrence, the corrupted coordinates were set to their corresponding page corner.

Some OCR scans could not be matched with PDF files, probably because of typos in file names or different naming conventions. Since a large majority of samples could be matched, removing non-matches was deemed to be the best solution.
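The bounding box repair described in the second issue could look roughly like the sketch below. It rests on two assumptions that the text does not spell out: a missing coordinate is represented as `None`, and "corresponding page corner" means the top-left corner for (x0, y0) and the bottom-right corner for (x1, y1). The function name `repair_box` is hypothetical.

```python
def repair_box(box, page_width, page_height):
    """Return (x0, y0, x1, y1) with missing or out-of-bounds values replaced.

    Assumption: x0/y0 fall back to the top-left page corner (0, 0) and
    x1/y1 fall back to the bottom-right corner (page_width, page_height).
    """
    x0, y0, x1, y1 = box

    def fix(value, fallback, upper):
        # A coordinate is corrupted if it is missing or outside [0, upper].
        if value is None or value < 0 or value > upper:
            return fallback
        return value

    return (
        fix(x0, 0, page_width),
        fix(y0, 0, page_height),
        fix(x1, page_width, page_width),
        fix(y1, page_height, page_height),
    )


# A box with a missing x0 and a y1 that lies below the page.
print(repair_box((None, 10, 20, 2000), page_width=1000, page_height=1400))
```

Valid boxes pass through unchanged, so the function can be applied to every row without first checking which ones are corrupted.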

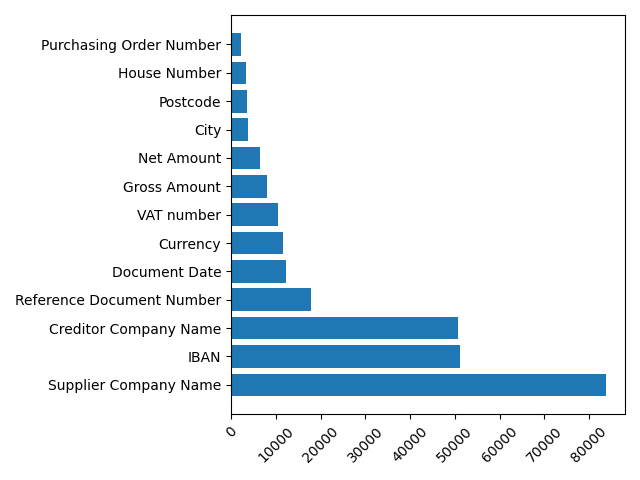

Preprocessing yielded 88.5k samples, with a predetermined 70.8k/17.7k train/evaluation split. The total number of tokens is 4.4 million. Excluding the "0" entity, there are 14 entity types, shown below:

Training and Error Evaluation

The AdamW optimizer was used alongside a linear learning rate scheduler with a learning rate of 5x10^-5 and a warmup of 0.1. With 8 GB of GPU RAM, the largest batch size that fit was 4. To compensate for the small batch size, gradients were accumulated over 8 steps before each parameter update, making the effective batch size 32.
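To make the numbers above concrete, the following sketch computes the effective batch size and the learning rate at a given optimizer step. It assumes that "a warmup of 0.1" means the first 10% of optimizer steps are a linear warmup from zero, followed by a linear decay to zero, which is how linear schedules with warmup commonly behave. The function names and the total step count in the usage lines are hypothetical.

```python
def effective_batch_size(per_step_batch, accumulation_steps):
    """Samples that contribute to one parameter update."""
    return per_step_batch * accumulation_steps


def linear_schedule_lr(step, total_steps, base_lr=5e-5, warmup_ratio=0.1):
    """Learning rate at optimizer step `step` (0-based).

    Assumption: linear warmup over warmup_ratio * total_steps updates,
    then linear decay that reaches zero at total_steps.
    """
    warmup_steps = int(total_steps * warmup_ratio)
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = total_steps - step
    return base_lr * max(0.0, remaining / max(1, total_steps - warmup_steps))


print(effective_batch_size(4, 8))  # 32

total_steps = 1000  # hypothetical number of parameter updates
for step in (0, 50, 100, 550, 1000):
    print(step, linear_schedule_lr(step, total_steps))
```

With gradient accumulation, one scheduler step corresponds to one parameter update, that is, to 8 forward and backward passes over batches of 4 samples.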

The model was evaluated after each epoch, and the best model was determined by the overall macro F1-score. The model was trained for a total of 12 epochs on a GeForce GTX 1080, amounting to 53 hours of training time.
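For readers unfamiliar with the metric, the macro F1-score is the unweighted mean of the per-class F1-scores, so rare entity types count as much as frequent ones. The sketch below computes it at token level over all labels. Whether the reported score was computed per token or per entity span, and whether the non-entity label was included, is not stated, so both choices here are assumptions.

```python
def macro_f1(true_labels, predicted_labels):
    """Unweighted mean of per-class F1 over all classes that occur.

    Assumption: token-level scoring, non-entity label included.
    """
    pairs = list(zip(true_labels, predicted_labels))
    classes = sorted(set(true_labels) | set(predicted_labels))
    scores = []
    for cls in classes:
        tp = sum(1 for t, p in pairs if t == cls and p == cls)
        fp = sum(1 for t, p in pairs if t != cls and p == cls)
        fn = sum(1 for t, p in pairs if t == cls and p != cls)
        denominator = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs.
        scores.append(2 * tp / denominator if denominator else 0.0)
    return sum(scores) / len(scores)


true = ["ZERO", "ZERO", "SUPPLIER", "ZERO", "CITY"]
pred = ["ZERO", "ZERO", "SUPPLIER", "CITY", "CITY"]
print(round(macro_f1(true, pred), 4))
```

In this toy example the per-class scores are 2/3 for CITY, 1 for SUPPLIER and 0.8 for ZERO, which average to roughly 0.82.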

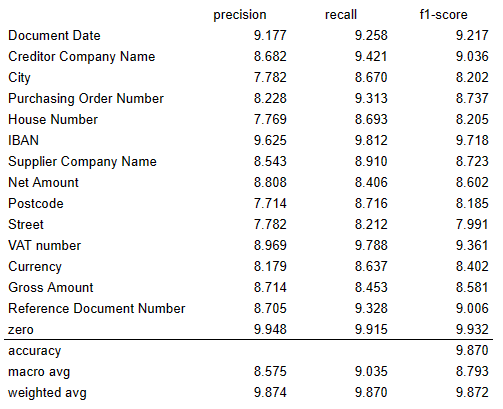

The model gave the best results on the evaluation split after 6 epochs, reaching an F1-score of 0.88.

LayoutXLM

The model achieves slightly better results compared to a BERT baseline:

BERT

Table 2: BERT results

A few things should be noted.

The similar performances of BERT and LayoutXLM may indicate that the visual information in this particular document dataset was insufficient to make a difference.

Since LayoutXLM works only with single-page samples, splitting multi-page documents might lead to a loss of valuable context. In contrast, BERT processed each multi-page document as a single sample. Perhaps LayoutXLM would compare more favourably to BERT if the dataset consisted only of single-page documents.

BERT's classification scores are the result of grid search hyperparameter optimization, whereas LayoutXLM was trained with a single hand-picked hyperparameter set. A more extensive hyperparameter search could lead to even better results.

8.7k tokens were misclassified with a confidence score of 0.98 or higher. In almost all of these instances, the model classified an entity token as a non-entity or vice versa, as opposed to mistaking one entity type for another. It was suggested that these high-confidence misclassifications are the result of poor annotation. More work should be done to analyze annotation quality.
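The analysis above can be sketched as a simple filter over per-token predictions. The record layout (true label, predicted label, confidence), the function name, and the use of `ZERO` as the non-entity label (taken from the CSV excerpt) are assumptions for illustration only.

```python
def high_confidence_errors(records, threshold=0.98, non_entity="ZERO"):
    """Split confident misclassifications into two groups.

    records: iterable of (true_label, predicted_label, confidence).
    Returns (boundary_errors, entity_confusions), where boundary errors
    mix up an entity with the non-entity label and entity confusions
    mistake one entity type for another.
    """
    boundary_errors, entity_confusions = [], []
    for true_label, predicted_label, confidence in records:
        if true_label == predicted_label or confidence < threshold:
            continue  # correct prediction, or not confident enough
        record = (true_label, predicted_label, confidence)
        if non_entity in (true_label, predicted_label):
            boundary_errors.append(record)
        else:
            entity_confusions.append(record)
    return boundary_errors, entity_confusions


sample = [
    ("SUPPLIER", "ZERO", 0.99),      # entity missed with high confidence
    ("ZERO", "CITY", 0.985),         # non-entity labelled as an entity
    ("CITY", "SUPPLIER", 0.99),      # one entity mistaken for another
    ("CITY", "ZERO", 0.60),          # wrong, but below the threshold
    ("SUPPLIER", "SUPPLIER", 0.99),  # correct
]
boundary, confusions = high_confidence_errors(sample)
print(len(boundary), len(confusions))
```

Tokens that land in the first group are natural candidates for a manual annotation review, since a confident disagreement between model and label may point to a labelling mistake rather than a model error.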

Conclusion

LayoutXLM, a multilingual version of the LayoutLMv2 model, was trained on an in-house dataset of German invoices. The model outperforms a BERT baseline by a slight margin, despite BERT being optimized with a robust hyperparameter search. Several directions could be pursued in future work:

Analysis of the invoice dataset annotation quality and its impact on model performance.

Hyperparameter search optimization for LayoutXLM.

Comparison with BERT on different, perhaps more visually-rich document datasets.

References

LayoutLM: Pre-training of Text and Layout for Document Image Understanding

LayoutLMv2: Multi-modal Pre-training for Visually-Rich Document Understanding

LayoutXLM: Multimodal Pre-training for Multilingual Visually-rich Document Understanding

LayoutLMv3: Pre-training for Document AI with Unified Text and Image Masking